Recently, our team was invited to present at a seminar series for a client company, focusing on the vital topics of data security and privacy. While much of our discussion centered on raising awareness about current data security practices, we also took the opportunity to look forward and highlight the future challenges that organizations may face.

One of the most pressing and intriguing threats on the horizon is the use of artificial intelligence (AI) to enhance phishing attacks. As AI technology advances, so do the capabilities of malicious actors who seek to exploit these tools for social engineering attacks.

This article first examines how prevalent social engineering vulnerabilities are, drawing on several real-world examples, and then turns to the emerging threat of AI-augmented phishing.

By understanding how these sophisticated attacks operate and what steps can be taken to mitigate their impact, organizations can better protect their sensitive data and maintain robust security protocols in an increasingly digital world.

Tales from the (En)crypt

By commonly cited industry estimates, roughly 90% of cyberattacks involve some form of social engineering. For example, attackers often gain access to company systems by compromising employee credentials through phishing or other social engineering tactics.

The Okta Incident: A Case of Underestimated Impact

In October 2023, Okta, a leading identity and access management provider, experienced a significant security breach [1]. The attackers exploited an employee’s personal Google account that had been used within a company context, which gave them access to sensitive company information.

Using stolen access tokens, they viewed files uploaded by Okta’s customers. The intrusion was initially reported as affecting 134 companies.

Okta initially underestimated the breach’s severity: it ultimately affected 100% of the users of its customer support system. Although third parties detected the intrusion early, Okta delayed notifying its customers, which left them exposed for longer.

This breach shows the need for strict rules on using personal accounts for business, regular training to spot phishing, timely communication, and a strong incident response plan to reduce damage.

The Code Spaces Catastrophe: Lessons in Access Control and Backup Security

In 2014, Code Spaces, a source code hosting provider, suffered a catastrophic security breach [2] that began with a Distributed Denial of Service (DDoS) attack followed by a ransom demand.

When the company refused to pay, the attackers used their access to Code Spaces’ Amazon Web Services (AWS) account to delete most of its data, backups, and machine configurations. The loss was severe enough to force the company to close.

This incident illustrates the critical importance of implementing stringent access controls, regularly updating security credentials, and ensuring that backups are stored in multiple, secure locations.
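
One way to make the backup lesson concrete is a small check that every dataset has copies in at least two locations that do not share an account with production. The sketch below is illustrative only: the `BackupCopy` record, the account and region names, and the two-location threshold are all assumptions made for this example, not details from the Code Spaces incident.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BackupCopy:
    """One stored copy of a dataset (hypothetical inventory record)."""
    dataset: str
    account: str  # cloud account or site that holds the copy
    region: str


def under_protected(copies, production_account, min_independent=2):
    """Return datasets with fewer than `min_independent` backup locations
    outside the production account."""
    locations = {}
    for copy in copies:
        locations.setdefault(copy.dataset, set())
        # Copies inside the production account do not count: whoever
        # controls that account can delete them along with the originals.
        if copy.account != production_account:
            locations[copy.dataset].add((copy.account, copy.region))
    return sorted(
        name for name, places in locations.items()
        if len(places) < min_independent
    )


# Hypothetical inventory used only to exercise the check.
inventory = [
    BackupCopy("repos", "prod", "us-east-1"),
    BackupCopy("repos", "backup-a", "us-west-2"),
    BackupCopy("repos", "backup-b", "eu-west-1"),
    BackupCopy("configs", "prod", "us-east-1"),
    BackupCopy("configs", "backup-a", "us-west-2"),
]

print(under_protected(inventory, production_account="prod"))  # ['configs']
```

The point of excluding the production account is that a backup reachable with the same credentials as the live system offers little protection once those credentials are compromised.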

HIPAA Violation: The Anthem Data Breach

In 2015, Anthem, Inc., a health insurance provider, experienced a massive data breach [3] where attackers gained access to the protected health information (PHI) of nearly 79 million individuals.

This sophisticated cyberattack involved phishing emails and malware installation, allowing the attackers to steal sensitive data, including Social Security numbers and addresses.

Anthem faced significant financial penalties and legal settlements, including a record $16 million HIPAA settlement with the HHS Office for Civil Rights (OCR).

This breach underscores the necessity of comprehensive risk analysis and continuous employee training to prevent similar incidents.

Cybersecurity: AI-pocalypse Now

Phishing scams have always been a prevalent threat, but the integration of AI has taken these attacks to a new level, creating what is known as “supercharged phishing.” Unlike traditional phishing attacks, which often relied on easily recognizable errors and poor execution, AI-driven phishing is sophisticated, targeted, and alarmingly effective.

Let’s explore how AI is transforming phishing and what steps you can take to protect your organization.

Automated and Efficient Attacks

AI technology allows for the automation of the entire phishing process, significantly reducing the costs and time required to execute these attacks. Using large language models (LLMs), cybercriminals can generate convincing phishing emails that mimic legitimate communication styles.

This automation increases the frequency and scale of attacks, posing a substantial threat to businesses of all sizes.

Specialized AI Models

New AI tools specifically designed for phishing, such as WormGPT and FraudGPT [4], are now available on the dark web. These models can craft highly personalized and persuasive phishing messages, making it harder for individuals to distinguish between genuine and malicious communications.

The sophistication of these tools enables attackers to target specific individuals within an organization, increasing the likelihood of successful breaches.

Personalization through Behavioral Analysis

AI-driven phishing attacks [5] leverage extensive data analysis to personalize messages based on the target’s online behavior and social media activity. This level of customization makes phishing emails appear more legitimate and relevant, enhancing their effectiveness.

For instance, an AI-generated email might reference recent interactions or shared interests, convincing the recipient to trust the message and take the desired action.

Real-World Example: The Deepfake Vishing Scam

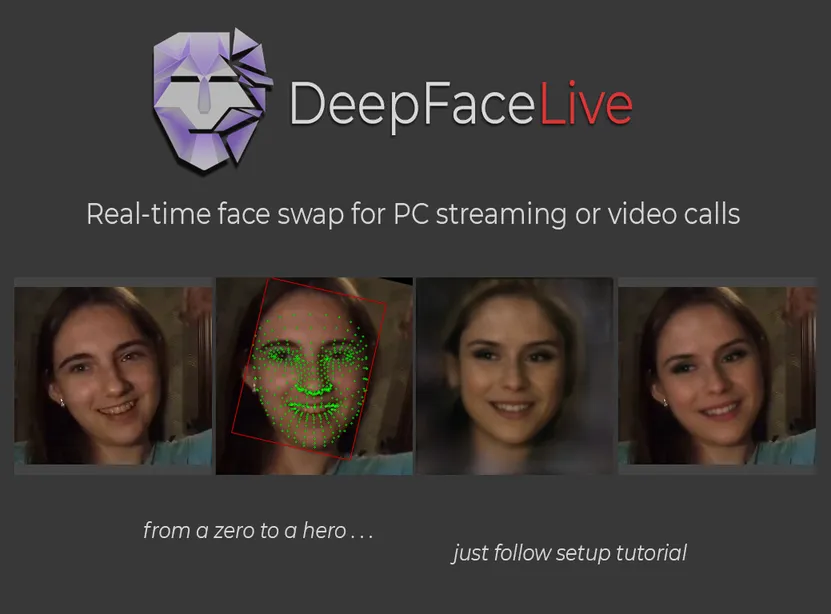

In March 2019, the CEO of a UK-based energy company received a call [6] from what appeared to be his parent company’s CEO, requesting an urgent transfer of €220,000 (~$243,000). The voice on the call was a sophisticated deepfake, created using AI technology to mimic the real CEO’s accent and mannerisms.

This scam succeeded due to the realism of the deepfake voice and the psychological pressure applied through urgent instructions. Unfortunately, the funds were transferred to the attackers’ account, and efforts to recover the money were unsuccessful.

This incident highlights the growing threat of AI-driven deepfake vishing, where attackers use voice cloning technology to impersonate trusted individuals in real time.
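
Because a cloned voice can defeat recognition by ear, one common countermeasure is to make the release of funds depend on process rather than on how convincing the caller sounds. The following is a minimal sketch of such a rule, not a description of any control the affected company had in place. The `PaymentRequest` fields, the callback requirement, and the €10,000 dual-approval threshold are hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass
class PaymentRequest:
    amount_eur: float
    channel: str             # how the request arrived: "phone", "email", ...
    callback_verified: bool  # confirmed via contact details already on file
    approvers: int           # distinct people who signed off


def may_release(request, dual_approval_above=10_000):
    """Decide whether a transfer may proceed under the illustrative policy."""
    # A voice or an email alone is never treated as proof of identity.
    if request.channel in {"phone", "email"} and not request.callback_verified:
        return False
    # Large transfers need a second approver regardless of who asked.
    if request.amount_eur > dual_approval_above and request.approvers < 2:
        return False
    return True


# A request shaped like the 2019 call: urgent, by phone, unverified.
urgent_call = PaymentRequest(220_000, "phone", callback_verified=False, approvers=1)
print(may_release(urgent_call))  # False
```

Under this sketch, urgency has no influence on the outcome: the request is blocked until someone calls back on a number already on file and a second person approves the amount.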

Strengthening Your Defenses

Emerging AI-driven threats such as supercharged phishing, deepfake vishing, and personalized phishing attacks collectively call for significantly stronger cybersecurity defenses.

Countering AI-driven social engineering requires a multi-faceted approach:

- Implement Strong Access Controls: Ensure that only authorized personnel have access to sensitive information and regularly review access permissions.

- Invest in Employee Training: Conduct regular training sessions to educate employees about the latest social engineering tactics and how to recognize and report them.

- Develop a Robust Incident Response Plan: Have a clear plan in place for responding to security breaches, including timely communication with affected parties.

- Utilize Advanced Security Tools: Leverage AI-driven security tools to monitor for suspicious activities and enforce strict authentication measures.

- Promote a Culture of Vigilance: Encourage employees to stay alert and report any suspicious activities or communications immediately.
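
As an illustration of the first item, a periodic access review can start from something as simple as flagging grants that have not been used recently. This is a minimal sketch under stated assumptions: the grant records, their field names, and the 90-day idle window are hypothetical, and a real review would draw this data from your identity provider or audit logs.

```python
from datetime import date, timedelta


def stale_grants(grants, today, max_idle_days=90):
    """Return (user, resource) pairs whose access has not been used recently.

    `grants` is a list of dicts with keys "user", "resource", and "last_used"
    (a date, or None if the access was never used).
    """
    cutoff = today - timedelta(days=max_idle_days)
    return [
        (g["user"], g["resource"])
        for g in grants
        if g["last_used"] is None or g["last_used"] < cutoff
    ]


# Hypothetical records used only to exercise the check.
grants = [
    {"user": "alice", "resource": "support-portal", "last_used": date(2024, 5, 20)},
    {"user": "bob", "resource": "support-portal", "last_used": date(2023, 11, 2)},
    {"user": "carol", "resource": "billing-db", "last_used": None},
]

print(stale_grants(grants, today=date(2024, 6, 1)))
# [('bob', 'support-portal'), ('carol', 'billing-db')]
```

Flagged grants are candidates for review rather than automatic removal, since some legitimate access is used only rarely.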

By understanding the tactics used in AI-driven social engineering attacks and taking proactive steps to mitigate them, you can significantly enhance your organization’s cybersecurity posture.

Remember, cybersecurity is not just an IT issue; it’s a business imperative that requires the attention and commitment of the entire organization. Stay vigilant, stay informed, and protect your business from the ever-evolving landscape of cyber threats.

Contact Vertiance today for more information on how we can help your organization adopt and maintain a risk-based security program. We are here to help you eliminate uncertainty and strengthen your defenses against cyber threats.

1. TechCrunch – Okta Admits hackers accessed data on all customers during recent breach

2. CSO Online – Code Spaces forced to close its doors after security incident

3. HHS – Anthem Pays OCR $16 Million in Record HIPAA Settlement Following Largest U.S. Health Data Breach in History

4. Fox News – The shadowy underbelly of AI

5. FBI – FBI Warns of Increasing Threat of Cyber Criminals Utilizing Artificial Intelligence

6. Forbes – A Voice Deepfake Was Used To Scam A CEO Out Of $243,000